Leveraging AI for Smarter Experimentation: Multi-Armed Bandit

Understanding and engaging your audience can make or break your digital presence.

The art and science of experimentation have never been more crucial.

Traditional A/B testing has long stood as the backbone of website optimization and content personalization strategies. However, as we venture deeper into the era of AI, the limitations of conventional methods have become increasingly apparent.

This is why we are thrilled to introduce a smarter way to distribute traffic in experiments: multi-armed bandit (MAB), powered by Ninetailed AI.

The New Frontier in Experimentation

The multi-armed bandit approach takes its name from a hypothetical gambling scenario in which a player faces several slot machines ("one-armed bandits", each one an "arm") with unknown payout rates and tries to maximize returns by finding the most rewarding machine while still playing.

In experimentation, MAB algorithms apply the same principle: they allocate traffic between competing variations based on real-time performance data.

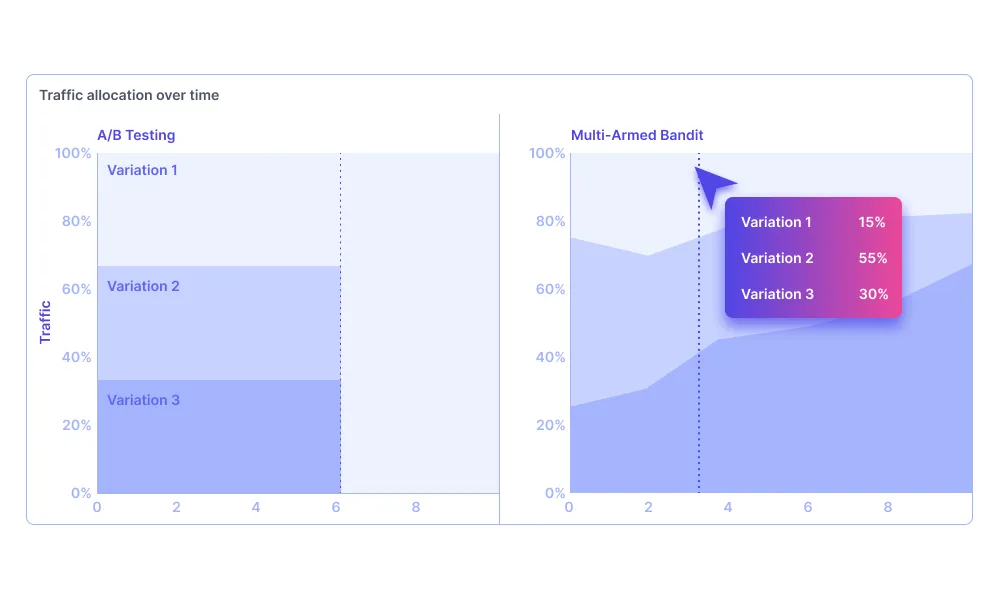

Unlike traditional methods, which divide resources equally regardless of outcome, MAB continuously adjusts its strategy to favor the most effective options. This smart allocation leads to more efficient and effective experimentation, optimizing every interaction with your audience.
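To make the idea of continuous adjustment concrete, here is a minimal sketch of one common bandit strategy, Thompson sampling with Beta priors. It is an illustration only and not a description of how Ninetailed AI allocates traffic; the names `BetaBandit` and `simulate`, and the conversion rates used in the example, are assumptions made for this sketch.

```python
import random


class BetaBandit:
    """Thompson sampling over variations with yes/no (convert / no convert) outcomes."""

    def __init__(self, n_arms, rng=None):
        self.successes = [0] * n_arms  # conversions observed per variation
        self.failures = [0] * n_arms   # non-conversions observed per variation
        self.rng = rng or random.Random()

    def choose(self):
        # Draw a plausible conversion rate for each variation from its
        # Beta(successes + 1, failures + 1) posterior, then show the
        # variation with the highest draw.
        draws = [
            self.rng.betavariate(s + 1, f + 1)
            for s, f in zip(self.successes, self.failures)
        ]
        return max(range(len(draws)), key=draws.__getitem__)

    def update(self, arm, converted):
        if converted:
            self.successes[arm] += 1
        else:
            self.failures[arm] += 1


def simulate(true_rates, rounds, seed=0):
    """Return how many visitors each variation received (hypothetical rates)."""
    rng = random.Random(seed)
    bandit = BetaBandit(len(true_rates), rng)
    shown = [0] * len(true_rates)
    for _ in range(rounds):
        arm = bandit.choose()
        converted = rng.random() < true_rates[arm]
        bandit.update(arm, converted)
        shown[arm] += 1
    return shown


if __name__ == "__main__":
    # Assumed conversion rates for three variations; the second is the best.
    print(simulate([0.05, 0.20, 0.10], rounds=5000))
```

In a run like this, the variation with the highest underlying rate typically ends up receiving most of the traffic, while the weaker ones still get occasional exposure in case early results were misleading.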

Traditional A/B Testing vs. Multi-Armed Bandit

A/B testing, while invaluable, presents several challenges.

It requires setting aside significant portions of your audience to test each variation, potentially missing out on conversions.

It also operates on a fixed schedule, often leading to delays in implementing superior options.

Most critically, it lacks the flexibility to adapt to emerging patterns during the testing phase.

The multi-armed bandit model overcomes these limitations by adopting an adaptive learning approach. It intelligently distributes exposure amongst variations in real-time, based on their ongoing performance.

This not only accelerates the discovery of optimal content but also maximizes engagement and conversion rates throughout the testing process.

Impact of Multi-Armed Bandit in Action

Consider an online retailer testing promotional banners on its homepage.

With A/B testing, traffic is split equally between two or more variants for weeks before a winner is declared. Now imagine applying MAB from day one: as evidence accumulates, the algorithm identifies a frontrunner and incrementally directs more traffic to it, capturing additional sales before a traditional test would have concluded.

Or picture a media site experimenting with headline variations to increase article views.

Through MAB, the site dynamically shifts exposure toward the headlines that drive the most engagement, lifting overall viewership and reducing bounce rates more efficiently than a static A/B test would.
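The banner scenario above can be sketched as a small simulation that compares an even split with an adaptive allocation. This is a rough illustration under stated assumptions: the conversion rates are invented, the adaptive policy is a simple epsilon-greedy rule chosen for brevity (not Ninetailed's method), and the function names `even_split` and `epsilon_greedy` are hypothetical.

```python
import random


def even_split(rates, visitors, rng):
    """Classic A/B allocation: rotate visitors evenly across variants."""
    conversions = 0
    for i in range(visitors):
        arm = i % len(rates)
        conversions += int(rng.random() < rates[arm])
    return conversions


def epsilon_greedy(rates, visitors, rng, epsilon=0.1):
    """Adaptive allocation: mostly show the best-looking variant so far,
    but keep exploring a random variant a fraction `epsilon` of the time."""
    shows = [0] * len(rates)
    wins = [0] * len(rates)
    conversions = 0

    def observed_rate(arm):
        # Variants that have never been shown are tried first.
        return wins[arm] / shows[arm] if shows[arm] else float("inf")

    for _ in range(visitors):
        if rng.random() < epsilon:
            arm = rng.randrange(len(rates))
        else:
            arm = max(range(len(rates)), key=observed_rate)
        converted = rng.random() < rates[arm]
        shows[arm] += 1
        wins[arm] += int(converted)
        conversions += int(converted)
    return conversions


if __name__ == "__main__":
    banner_rates = [0.03, 0.09]  # assumed conversion rates for two banners
    print("even split:", even_split(banner_rates, 10_000, random.Random(1)))
    print("adaptive:  ", epsilon_greedy(banner_rates, 10_000, random.Random(1)))
```

When one banner is clearly stronger, the adaptive run tends to collect more conversions over the same number of visitors, because fewer visitors are sent to the weaker banner while the test is still running. The size of the gain depends heavily on how different the variants really are.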

Take the Next Step in Experimentation

With Ninetailed's multi-armed bandit feature, the power of AI-driven experimentation is at your fingertips.

We invite you to explore the bounds of what's possible when you're not just reacting to analytics post-experiment but actively shaping user experiences in real-time.

Are you ready to revolutionize the way you engage with your audience?

Request a demo today and discover how Ninetailed's multi-armed bandit and other AI capabilities can transform your digital strategy through smarter, more responsive experimentation.

Unleash the full potential of your content and customer data. With the Ninetailed AI Platform on your side, the possibilities are endless.

Get in touch to learn how Ninetailed personalization, experimentation, and insights work inside your CMS.
