
A Step-by-Step Experimentation Guide for Contentstack

No business wants to leave money on the table. And that's what can happen if you're not constantly testing your website to see how you can improve your conversion rates. As the world of online marketing continues to evolve, so too do businesses' strategies and tactics to increase their conversion rates. One of the most effective methods for doing so is A/B testing.

A/B testing is a technique that allows businesses to compare two versions of content by showing each version to a different, randomly assigned group of visitors and measuring which one achieves the higher conversion rate.

One of the great things about A/B testing is that it can be used to test pretty much anything on your website or app, from the headline of an article to the color of a call-to-action button.

In this comprehensive guide, we dive into the details of A/B testing inside Contentstack, illustrating how you can maximize user engagement and conversion rates through a successful experimentation strategy. Whether you're a seasoned pro or just getting started, we'll break down the process into manageable steps, providing actionable insights that will help you leverage Contentstack to its fullest.

Common Barriers to a Successful Experimentation Strategy

Before we dive into the details of experimentation for Contentstack, it's important to understand the common barriers that often stand in the way of a successful experimentation strategy.

Though experimentation is a powerful tool for enhancing user experience and driving conversions, many businesses struggle to implement it effectively. Common reasons include reliance on developers, siloed workflows, difficulty managing and analyzing data, and slow testing velocity.

By understanding these obstacles, you'll be better equipped to tackle them head-on and create a better digital experience that not only meets but exceeds your audience's expectations. So, let's embark on this journey of discovery and optimization and unlock the true potential of your Contentstack website.

Developer Dependency

Developer dependency is one of the common barriers to a successful experimentation strategy. This term refers to the reliance on developers or engineers to implement, run, and manage experiments.

Here's why this can be a problem:

Limited Availability: Developers often have their hands full with core product development tasks. If they are also responsible for running or supporting experiments, both areas can suffer delays or lower quality because of divided attention and time constraints.

Skill Set: While developers are adept at building products, they may not have specialized knowledge in designing and interpreting experiments, which combine elements of user experience design, statistics, and behavioral psychology.

Prioritization Issues: In many organizations, experiments can be viewed as secondary to product development. This means that testing could be de-prioritized when developers are under pressure to deliver product features, leading to less frequent or lower-quality testing.

Workflow Silos

Workflow silos are another common barrier to a successful experimentation plan.

In modern organizations, different departments and teams need to collaborate effectively to achieve common goals. However, when tools in the tech stack are outdated or can't integrate with one another, they create data and workflow silos between teams, leading to disjointed workflows and wasted time and resources.

For example, imagine a company where the marketing department uses one tool for customer engagement while the sales team uses another for customer relationship management. Without proper integration, marketing and sales teams might not have access to each other's data, and this can lead to missed opportunities and a lack of coordination when it comes to effective experimentation for different audience segments.

Measurement Challenges

How do you know if your A/B testing efforts are working?

This is one of the major challenges for marketers today.

Measuring the results of your tests tells you what's working and what needs to be improved. By measuring carefully, you'll be able to make better decisions about which content to show and improve your overall business performance.

However, measuring the success of testing initiatives can be a daunting task, especially with the sheer volume of data available today. You need to decide which metrics to use, how to track them, and how to tell a genuine improvement from random variation.
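One common way to tell a real difference in conversion rate from random variation is a two-proportion z-test. The sketch below is a generic statistical illustration, not how Ninetailed or Contentstack compute their results; the function name and the visitor and conversion counts are hypothetical.

```python
import math


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int) -> tuple[float, float]:
    """Return (z, two_sided_p) for the null hypothesis that A and B convert at the same rate.

    conv_a / n_a: conversions and visitors for the control (A).
    conv_b / n_b: conversions and visitors for the variant (B).
    """
    if n_a <= 0 or n_b <= 0:
        raise ValueError("each group needs at least one visitor")
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled conversion rate, assuming the null hypothesis (no real difference) is true.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    if se == 0:
        # Both groups converted at 0% or 100%: no evidence of a difference.
        return 0.0, 1.0
    z = (p_b - p_a) / se
    # Two-sided p-value: 2 * (1 - Phi(|z|)) equals erfc(|z| / sqrt(2)).
    p_value = math.erfc(abs(z) / math.sqrt(2))
    return z, p_value
```

For example, 200 conversions from 5,000 control visitors versus 250 from 5,000 variant visitors gives z of roughly 2.4 and a p-value of roughly 0.016, below the commonly used 0.05 threshold. Choosing the threshold and sample size before the test starts matters: checking repeatedly and stopping at the first "significant" result inflates the false-positive rate.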

Testing Velocity and Scalability Challenges

Having a robust testing rhythm enables companies to quickly identify what works best for their target audience, adapt their strategies accordingly, and ultimately deliver more relevant and engaging experiences for their customers.

A strong testing velocity allows businesses to stay ahead of the competition by continuously innovating, refining, and improving their offerings.

But when it comes to scaling the experiments, many businesses hit a wall.

Why is testing velocity such a challenge for companies?

Resource Constraints: Conducting tests requires time, personnel, and sometimes even financial resources. Companies may not always have these resources readily available, especially smaller businesses or startups. This can slow down the testing process significantly.

Technical Complexity: Setting up and running tests, especially those involving technology (like A/B tests on a website), can be technically complex. If a company lacks skilled personnel who understand how to conduct these tests, it can lead to delays.

Data Analysis: After a test is conducted, the data needs to be analyzed to yield usable insights. This analysis can be time-consuming and requires a certain level of expertise to ensure accuracy.

Decision-Making Bottlenecks: Sometimes, the decision-making process within a company can slow down testing velocity. For example, if every test needs approval from several different stakeholders, it can significantly delay the testing process.

Fear of Negative Impact: Some companies may hesitate to run tests frequently due to fear of potential negative impacts on the user experience or business performance.

How to Create Experiments Inside Contentstack (And Overcome Common Barriers to a Successful Testing Strategy)

Step 1: Define Your Audience

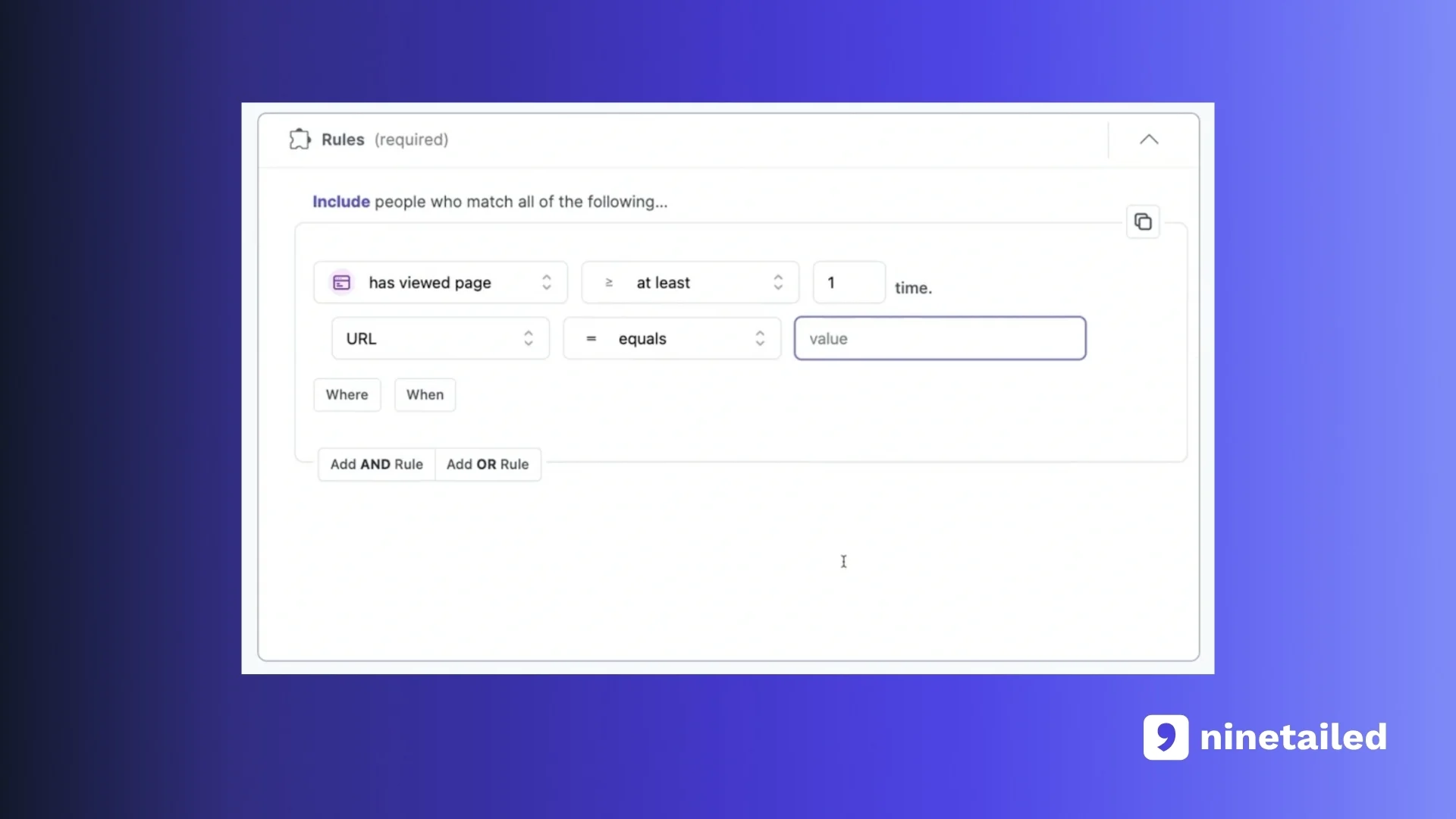

The very first step of a successful testing strategy is audience segmentation.

Once you connect Ninetailed to Contentstack, marketers, campaign managers, and content editors can create audience segments directly inside Contentstack. It's as easy as adding a new title or image to your homepage hero, thanks to Ninetailed's seamless integration with Contentstack.

With Ninetailed’s audience segmentation capabilities, you have full control over your customer data. You can easily define your audience segments based on:

Page view

Page property

Paid campaigns and UTM parameters

Referral

Events

Traits

Location

Device type
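To make the idea of condition-based segments concrete, here is a minimal sketch of how a segment built from the criteria above could be evaluated against a visitor profile. It is a hypothetical model, not Ninetailed's data schema or API: the `Visitor` fields, the `Segment` class, and the rule that all conditions must hold (logical AND) are all assumptions for illustration.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Visitor:
    """Hypothetical visitor profile; field names are illustrative, not Ninetailed's schema."""
    page_views: list[str] = field(default_factory=list)  # paths the visitor has viewed
    utm: dict[str, str] = field(default_factory=dict)    # UTM parameters from paid campaigns
    referrer: str = ""
    events: set[str] = field(default_factory=set)        # tracked events, e.g. "signup"
    traits: dict[str, object] = field(default_factory=dict)
    country: str = ""
    device: str = ""


# A condition is any yes/no check against a visitor profile.
Condition = Callable[[Visitor], bool]


@dataclass
class Segment:
    name: str
    conditions: list[Condition] = field(default_factory=list)

    def matches(self, visitor: Visitor) -> bool:
        # Assumption: every condition must hold (AND). An empty list matches all visitors.
        return all(condition(visitor) for condition in self.conditions)


# Hypothetical segment: mobile visitors in Germany who arrived via a paid (cpc)
# campaign and have viewed the pricing page.
paid_mobile_de = Segment(
    name="paid-mobile-de",
    conditions=[
        lambda v: v.utm.get("utm_medium") == "cpc",
        lambda v: v.device == "mobile",
        lambda v: v.country == "DE",
        lambda v: "/pricing" in v.page_views,
    ],
)
```

Modeling each condition as a plain predicate keeps the sketch open to data from other sources (CRM traits, firmographic attributes) without changing the `Segment` class.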

Moreover, because Ninetailed is built on composability and MACH principles, it can integrate with different customer data sources such as CDPs, CRMs, e-commerce data, intent data, firmographic data, and data warehouses. With Ninetailed's native integrations, you can also define and enrich your audience segments based on customer data from other sources, such as:

Albacross: firmographic data provider

Shopify, BigCommerce, Commerce Layer, and others: shopping data via Zapier

HubSpot: first-party CRM data via Zapier

Segment: first-party data from your customer data platform

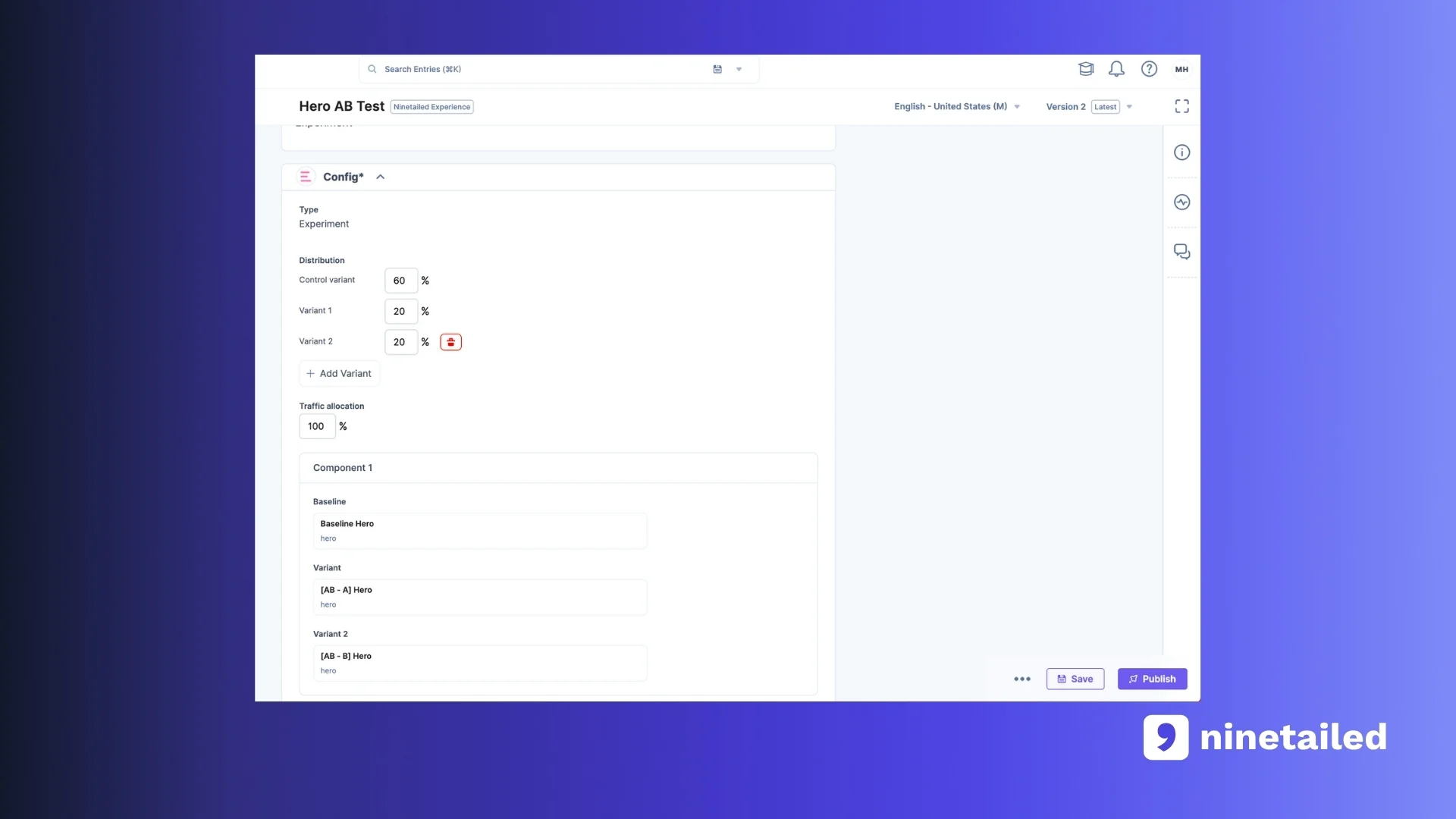

Step 2: Create the Experiments

Once you have the audience segment, the next step is to create an experiment for it. As with audience segmentation, you create experiments inside Contentstack, which means you can test any content type you have.

Before setting the baseline, you can define the fundamentals of the experiment:

The number of variants you are going to test

The percentage split of the experiment's audience between the control group and the variants

The traffic allocation, meaning the share of the audience segment that enters the experiment
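The interplay between traffic allocation and the control/variant split can be sketched with deterministic hashing, a common technique that keeps a returning visitor in the same group. This is a generic illustration, not Ninetailed's implementation; the function names, the SHA-256 scheme, and the convention that index 0 is the control (baseline) are assumptions.

```python
import hashlib
from typing import Optional


def bucket(visitor_id: str, experiment_id: str) -> float:
    """Map a visitor to a stable pseudo-random number in [0, 1) for one experiment."""
    digest = hashlib.sha256(f"{experiment_id}:{visitor_id}".encode("utf-8")).digest()
    # Keep the top 53 bits so the result is exactly representable and strictly below 1.
    return (int.from_bytes(digest[:8], "big") >> 11) / 2**53


def assign_variant(visitor_id: str, experiment_id: str,
                   traffic_allocation: float, distribution: list[float]) -> Optional[int]:
    """Return None if the visitor is not enrolled, else 0 for control or 1..n for a variant.

    traffic_allocation: share of the audience segment enrolled in the experiment, in (0, 1].
    distribution: weights for [control, variant 1, ..., variant n]; must sum to 1.
    """
    if not 0 < traffic_allocation <= 1:
        raise ValueError("traffic_allocation must be in (0, 1]")
    if not distribution or abs(sum(distribution) - 1.0) > 1e-9:
        raise ValueError("distribution must be non-empty and sum to 1")
    x = bucket(visitor_id, experiment_id)
    if x >= traffic_allocation:
        return None  # in the segment, but outside the experiment's traffic allocation
    # Rescale the enrolled range [0, traffic_allocation) back onto [0, 1).
    y = x / traffic_allocation
    cumulative = 0.0
    for index, weight in enumerate(distribution):
        cumulative += weight
        if y < cumulative:
            return index
    return len(distribution) - 1  # guard against floating-point rounding at the top end
```

For example, `assign_variant("user-42", "homepage-hero", 0.2, [0.5, 0.5])` enrolls roughly 20% of the segment and splits those visitors evenly between the baseline and one variant. Salting the hash with the experiment ID means a visitor's group in one experiment does not determine their group in another.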

After defining the fundamentals of the experiment, you need to select the audience you created earlier. Here, you can select all visitors or a specific group of visitors for testing.

Next, set the baseline content, which is what the control group sees, and then set the variant content.

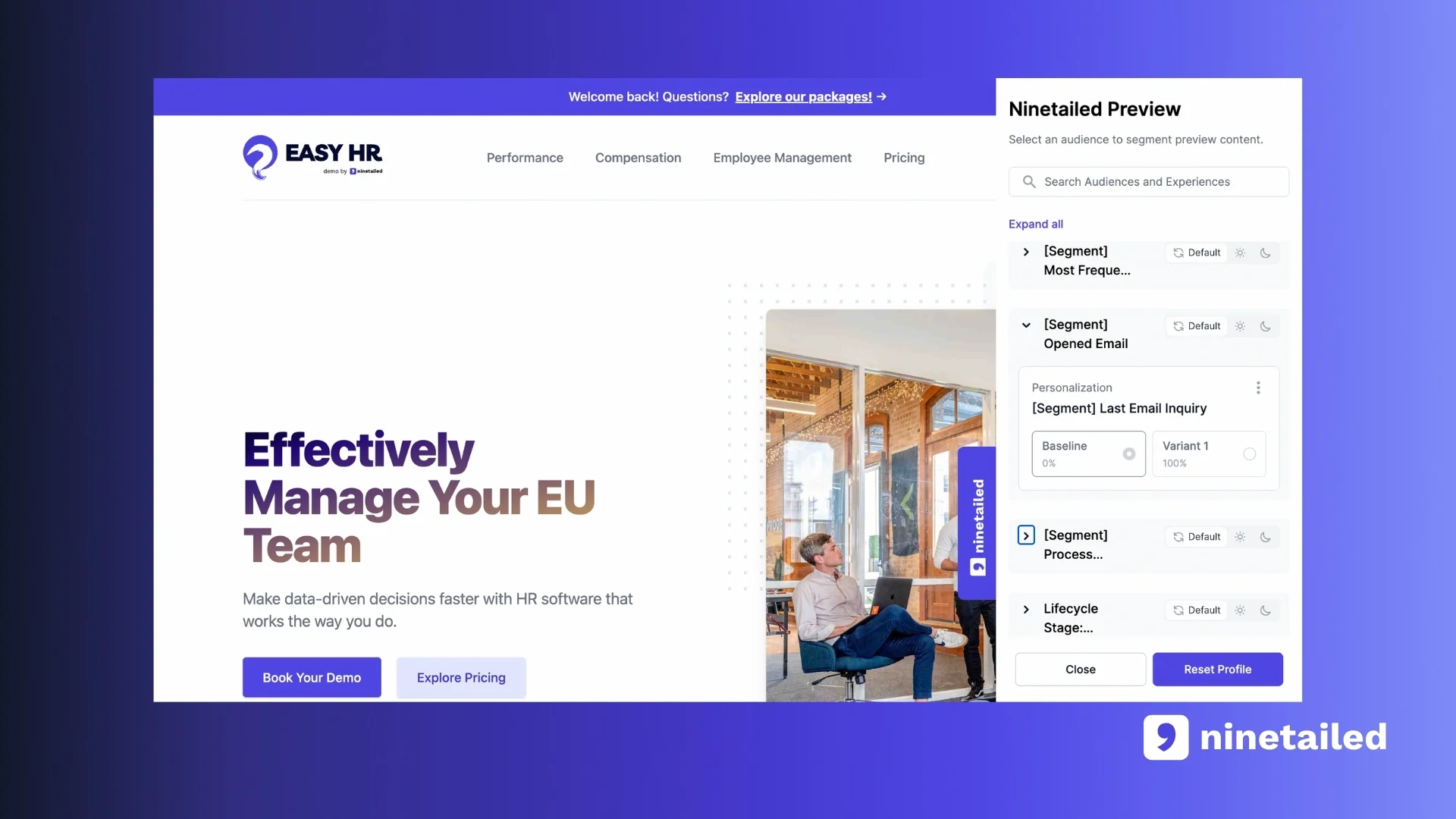

Because experiments can target specific audience segments, and those segments are defined by various conditions, previewing an experiment can be a challenge. This is where Ninetailed's Preview Widget for experiences comes into play.

With Ninetailed’s Preview Widget, you can preview all of your experiments. All you need to do is:

Choose the audience from the list

Select the experiment you want to preview

Step 3: Analyze the Results

So far, you've seen how to define audience segments, create experiments inside Contentstack, and preview the experiments you created.

How do you know if your experiments are working?

Measurement is one of the biggest challenges for marketers today. With Ninetailed, however, you have everything you need to segment your audience, create experiments, and analyze the results.

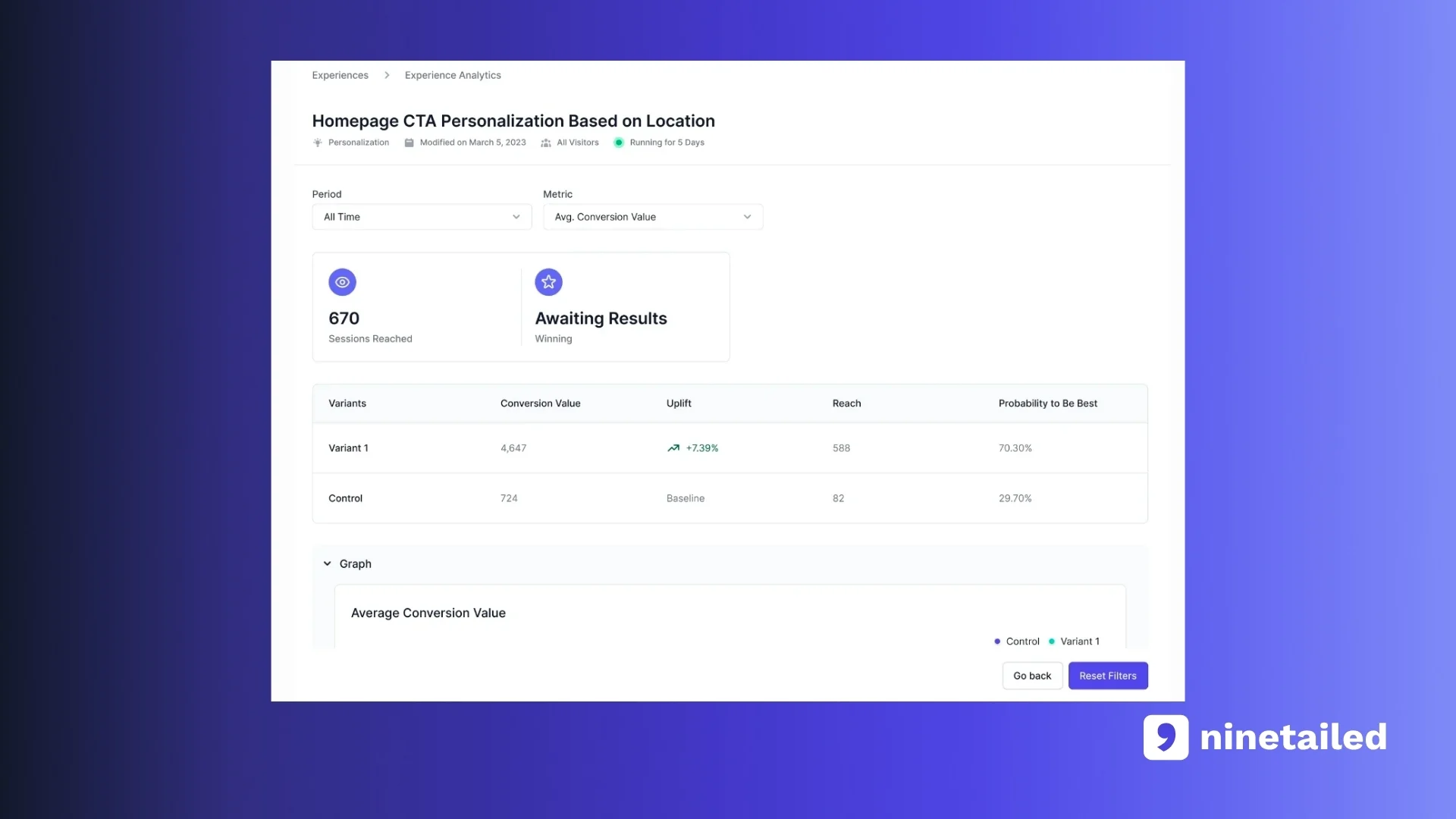

With Ninetailed’s experience insights, you can easily:

Understand the impact of experiments on conversion value and conversion rate

See the conversion uplift and the number of additional leads converted through each experiment

Compare each variant with the control group with ease
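Uplift and additional conversions come down to simple arithmetic on each group's visitors and conversions. The sketch below shows one common way to compute them; it is an illustrative assumption, not Ninetailed's exact formula, and the `GroupStats` class and sample numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class GroupStats:
    visitors: int
    conversions: int

    @property
    def conversion_rate(self) -> float:
        return self.conversions / self.visitors if self.visitors else 0.0


def relative_uplift(control: GroupStats, variant: GroupStats) -> float:
    """Relative lift of the variant's conversion rate over control's (0.25 means +25%)."""
    if control.conversion_rate == 0:
        raise ValueError("control has no conversions, so relative uplift is undefined")
    return variant.conversion_rate / control.conversion_rate - 1


def additional_conversions(control: GroupStats, variant: GroupStats) -> float:
    """Variant conversions beyond what the control rate predicts for the variant's traffic."""
    return variant.conversions - control.conversion_rate * variant.visitors
```

With 200 conversions from 5,000 control visitors (4%) and 250 from 5,000 variant visitors (5%), the relative uplift is 25% and the variant produced about 50 additional conversions. Uplift alone does not say whether the difference is statistically reliable, so it is usually read alongside a significance test.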

By focusing on these essential metrics, you can easily track the success of your experiments, make data-driven decisions, and optimize your strategies for maximum effectiveness.

Step 4: Optimize the Experiments

Experimentation is not a one-time activity but an ongoing and iterative process that aims to optimize the customer experience and increase conversion rates.

It involves continually analyzing customer data and behavior to understand their preferences, needs, and interests.

This level of testing enhances the customer experience by making it more relevant and engaging, thus fostering loyalty and increasing customer satisfaction.

Over time, as new data becomes available and customer preferences evolve, testing strategies need to be adjusted and refined to maintain their effectiveness.

In essence, experimentation is a continuous cycle of learning, adapting, and improving to deliver a superior customer experience.

The Bottom Line

To sum up, this experimentation guide for Contentstack provides a comprehensive roadmap to creating A/B tests inside Contentstack that boost conversions and revenue.

With Ninetailed's seamless integration and Contentstack's composable platform, creating experiments becomes as straightforward as adding new content entries. Because every task happens within one workflow, this setup also eliminates workflow silos. By integrating Ninetailed with Contentstack, you can:

Define your audience segments

Easily connect your customer data with integrations

Create experiments just like adding new content entries

Eliminate workflow silos by doing everything inside Contentstack

Understand the impact of experiments on conversions and revenue

Optimize what works based on the results

Get in touch to learn how Ninetailed personalization, experimentation, and insights work inside your CMS.