Integrating Personalization Into High Performance Stacks

This article comes from a collaboration with our friends at Hygraph, the federated content platform.

Modern Applications Require Modern Frameworks

The modern app development landscape has seen a tectonic shift over the past few years. Constant innovation keeps pushing the boundaries of digital experiences, and what digital interactions can do today is very different from what was possible before. Interactive web apps, blazing-fast e-commerce experiences, and omnichannel consistency across multiple platforms aren’t a novelty anymore; they’re expected. While much of this has to do with advancements in web technologies and frameworks, rising customer expectations deserve equal credit. People expect faster, and they expect better.

Evolving customer expectations at a global scale mean companies have an opportunity to re-evaluate whether their existing setup continues to meet their needs.

Maintaining, upgrading, and tweaking legacy systems is a labor-intensive process. It requires extensive rework of the architecture and replacement of deprecated modules, either with a similar solution or with a difficult workaround.

Proceeding with legacy systems that each serve one platform or purpose will leave many organizations with the bitter realization that they are no longer competitive in today's omnichannel world. Teams that bet on a system intended only for web-first development risk falling behind the market leaders in modern digital experiences.

To provide these customers with their expected experience and minimize the risk of churn, or worse, disinterest, companies need to constantly innovate and provide better experiences made possible by modern, high-performance technologies like Next.js, Go, and Redux, to name a few. In fact, the meteoric rise of JAMstack (JavaScript, APIs, and Markup) is directly related to the better performance, higher security, and easier scaling that it provides.

Customer experiences have come a long way from the novelty that eBay provided in the late 90s, and web technologies have caught up to match that. For proof, one only needs to see The Evolution of the Web to understand how many different technologies need to interact with one another to realize these experiences.

There’s no denying that all the benefits these technologies provide are great. But a speedy page load is only a part of what matters. What happens when companies expand the capabilities of their projects by adding analytics, testing, personalization, CRM, search, localization, and on and on? How can these modern frameworks ensure that features and functionality don’t cannibalize each other at the expense of performance and UX? How can a company provide all the capabilities of an Adobe Marketing Cloud or an SAP without suffering the costs, sluggish performance, overheads, and scaling pains that come with them?

Building Composable Architectures That Scale

In a monolith, all of the functionality is handled by one tool or service, commonly sold as a “suite”, and each function depends heavily on the health of the overall system.

Teams are locked into a narrow ecosystem of templates, frameworks, languages, reporting, etc. Those wishing to try something new may be met with resistance, either because it is not clear how the changes will affect all of the other functions, or because the time it takes to develop new workflows securely is simply not worth it. Any updates or patches require an upgrade to the entire system at once, and breaking changes that aren’t mitigated expertly could result in the entire infrastructure failing. Aside from the expenses associated with maintaining that infrastructure, this “downtime” can be a very tangible loss of business.

It is nearly impossible to swap out specific services or functionality with a different provider, so teams must use the services provided by the monolith or risk relying on an unsustainable workaround. Regular updates can be time-consuming and can result in downtime when they are not completed. Moving between versions of the same product can require massive restructuring and time-intensive work, with some features not carried over from previous versions.

What if there were a better way to keep the best functionality those suites offer, and not only match it but enhance it? All with lower costs, easier scalability, better control, and higher performance? Enter composable architectures.

A Composable Architecture is a more commercial abstraction of the “microservices” approach from development. This approach is what enables modernizing companies to implement a modular DXP. Using a modular DXP allows teams to choose tools that meet their specific needs and can easily be integrated into the workflows of the overall team. Instead of being weighed down by functionality that is not optimized for their use case, teams can evaluate the best tools for the job and easily connect them via API.

The result is that the foundation of the digital product stays highly performant, using modern frameworks as mentioned above, without sacrificing that performance for added functionality like personalization, analytics, content, commerce, payments, etc. Those services now deploy independently, communicate with the main product via API, are cloud-hosted, and are best-of-breed platforms for the use cases they support.

With just a simple change in direction and approach, companies are now able to sustain and innovate on all their business-critical needs, and unlock operational efficiencies by using best-of-breed services that build on top of their business model. All without the overheads of hosting, security, scalability, and vendor lock-in.
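As a rough illustration of services that deploy independently and talk to the main product via API, the TypeScript sketch below hides each capability behind a small interface. Everything here is hypothetical: the `ContentService` and `PersonalizationService` contracts, the canned in-memory implementations, and `renderHome` are illustrative names rather than any vendor's API. In a real stack each implementation would wrap calls to the chosen provider.

```typescript
// Hypothetical contracts: each capability sits behind a small interface,
// so a provider can be swapped without touching the page logic.
interface ContentService {
  getHeadline(slug: string): Promise<string>;
}

interface PersonalizationService {
  pickVariant(visitorId: string, variants: string[]): Promise<string>;
}

// Stand-in implementations that return canned data so the sketch runs.
// A real implementation would call the provider's HTTP API instead.
const inMemoryContent: ContentService = {
  async getHeadline(slug: string): Promise<string> {
    const headlines: Record<string, string> = { home: "Welcome" };
    const found = headlines[slug];
    return found !== undefined ? found : "Not found";
  },
};

const hashPersonalization: PersonalizationService = {
  async pickVariant(visitorId: string, variants: string[]): Promise<string> {
    // Deterministic choice: sum the character codes, then take the remainder.
    let sum = 0;
    for (let i = 0; i < visitorId.length; i++) sum += visitorId.charCodeAt(i);
    return variants[sum % variants.length];
  },
};

// The page depends only on the interfaces, not on any one vendor.
async function renderHome(
  content: ContentService,
  personalization: PersonalizationService,
  visitorId: string,
): Promise<string> {
  // The two services are independent, so they are called in parallel.
  const [headline, variant] = await Promise.all([
    content.getHeadline("home"),
    personalization.pickVariant(visitorId, ["hero-a", "hero-b"]),
  ]);
  return `${headline} [${variant}]`;
}
```

Replacing the personalization provider then means writing one new object that satisfies `PersonalizationService`; `renderHome` itself does not change.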

All of this sounds great, and all these services can be highly performant. But isn’t personalization the opposite? Isn’t personalization applied on the fly at the last minute? Won’t integrating this into a stack optimized for performance just slow everything down and result in wasted efforts because Marie will have to wait for 3-5 seconds until we know she’s Marie, so that the landing page can say “Hi, Marie”?

Not when applied correctly.

Let’s Talk About Personalization

Personalization has come a long way from A/B testing CTA colors and labels. More accurately defined as “Experience Optimization” today, personalization covers everything from landing page variations to individualized customer journeys across channels and platforms. Personalization tools are also no longer the standalone SaaS products that bolted a frontend editor onto a website to modify the content for x% of visitors. They’re now sophisticated engines making real-time calculations and deeply integrating with CDPs, Analytics, CMSs, and other DXP “components” to ensure each individual is getting an optimized experience when interacting with the digital product they’re on.

Performance has always been a major concern of personalization efforts. Client-side, server-side, on-the-edge, branch testing, and real-time segmentation have gone from being jargon to serious considerations when specifying the use case for personalization. With every millisecond of performance loss leading to very tangible impacts on revenue, making the right decision on implementing personalization is important.

When implementing personalization without sacrificing performance, I’d argue that there are two primary considerations. Firstly, the level of personalization needed (not wanted), and secondly, the intent.

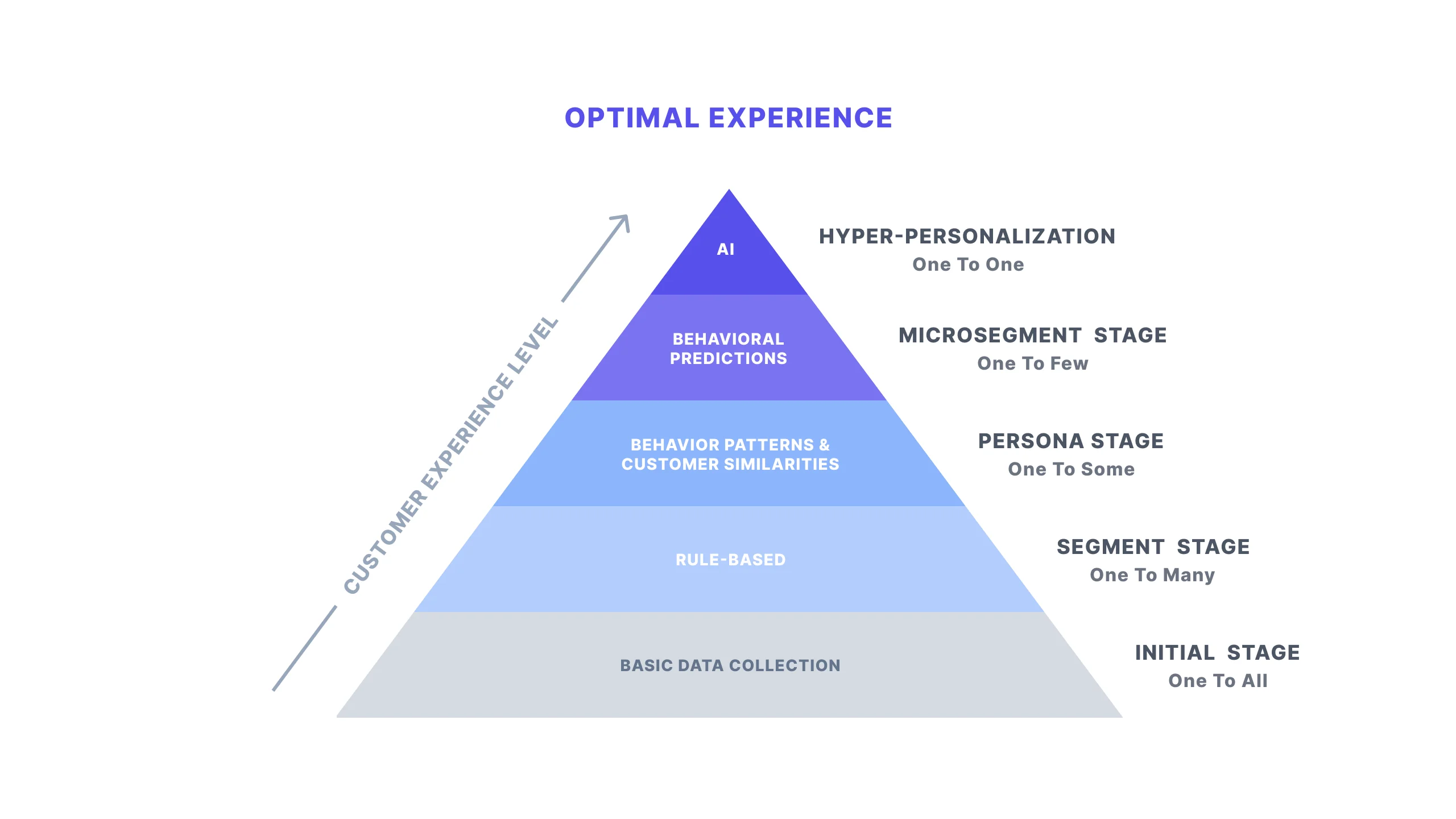

To achieve the optimal customer experience, companies need to embrace a personalization maturity journey with different stages which combine data, segmentation, optimization, and insight generation.

When looking at the levels of personalization based on collectable data, a company has to justify which level of personalization its business warrants. For a high-volume e-commerce company, it’s counterproductive to only collect and act upon basic metrics like location, traffic sources, or campaigns. They’d need a lot more preference data. Similarly, for a small consultancy with a corporate website, I’d argue that, degraded performance aside, it has no business collecting event-based metrics or micro-interactions even if it can.

The next consideration is the intent of that personalization.

Is the data collected going to be used to enhance the user experience (think Amazon’s product recommendation based on viewing history)? Then you probably need to collect a lot more and ensure that the heavy lifting is done on your end and by your CDP so as to not affect the end user’s experience.

Once the intent and the amount of data required are defined, it’s simpler to understand how personalization would work in your stack. Depending on the use case, you’re now in a better position to look for tools and engines that help you reach those business goals without impacting your stack overall.

To unlock the true power of personalization, you need to combine consumer attributes with their motivations and their intent, define motivation-based personas, and use a combination of data points to target the personalization audience.
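One way to picture that combination is a small rule matcher. The TypeScript sketch below is only an assumption-laden illustration: the `Visitor` fields, the persona names, and the first-match-wins rule are all invented for the example and are not taken from any specific personalization engine.

```typescript
// Hypothetical visitor profile combining the three kinds of signal:
// attributes (country, returning), motivation, and intent.
type Visitor = {
  country: string;
  returning: boolean;
  motivation: "bargain" | "research" | "gift";
  intent: "browse" | "compare" | "buy";
};

// A persona is a named set of conditions; every listed field must match.
type Persona = { name: string; match: Partial<Visitor> };

// Illustrative motivation-based personas (invented for this sketch).
const personas: Persona[] = [
  { name: "deal-hunter", match: { motivation: "bargain", intent: "buy" } },
  { name: "researcher", match: { motivation: "research", intent: "compare" } },
  { name: "returning-gifter", match: { motivation: "gift", returning: true } },
];

// Returns the first persona whose conditions all hold, or "default".
function matchPersona(visitor: Visitor, candidates: Persona[]): string {
  for (const persona of candidates) {
    const keys = Object.keys(persona.match) as (keyof Visitor)[];
    if (keys.every((key) => persona.match[key] === visitor[key])) {
      return persona.name;
    }
  }
  return "default";
}
```

Because the personas are plain data, they could in principle be maintained outside the codebase, for example in a CDP or CMS, and evaluated wherever the visitor signals are available.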

You’re now in a better position to judge whether you need full-stack options, branch-level testing, or whether your business goals support implementing this on a modern framework with negligible impact on your performance: personalization on the edge for your JAMstack apps.
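To make personalization on the edge more concrete, here is a minimal TypeScript sketch under stated assumptions: page variants are prebuilt at deploy time, and the edge only decides which one to serve using data already present on the request. The `EdgeRequest` shape, the `segment` cookie, the `/_variants/` path prefix, and the segment names are hypothetical and do not come from a particular CDN or framework.

```typescript
// Request data assumed to be available at the edge without extra lookups.
type EdgeRequest = {
  path: string;
  country?: string; // e.g. derived from a CDN geo header (assumed)
  cookies: Record<string, string>;
};

// Segments for which a static variant was prebuilt (assumed names).
const PREBUILT_SEGMENTS = ["returning", "de", "default"];

function isPrebuilt(segment: string): boolean {
  return PREBUILT_SEGMENTS.indexOf(segment) !== -1;
}

// Picks a segment from request data only; no blocking call to a backend.
function resolveSegment(req: EdgeRequest): string {
  // A segment cookie set on an earlier visit wins over geo.
  const fromCookie = req.cookies["segment"];
  if (fromCookie !== undefined && isPrebuilt(fromCookie)) return fromCookie;

  const fromGeo = (req.country !== undefined ? req.country : "").toLowerCase();
  if (isPrebuilt(fromGeo)) return fromGeo;

  return "default";
}

// Maps the incoming path to the prebuilt variant for the visitor's segment.
function rewritePath(req: EdgeRequest): string {
  return `/_variants/${resolveSegment(req)}${req.path}`;
}
```

The design choice is that the expensive work (rendering each variant) happens at build time, while the per-request work is a cookie read and a string comparison. That is why this style of personalization can sit in front of a static site with little added latency.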

Building Your Perfect Architecture

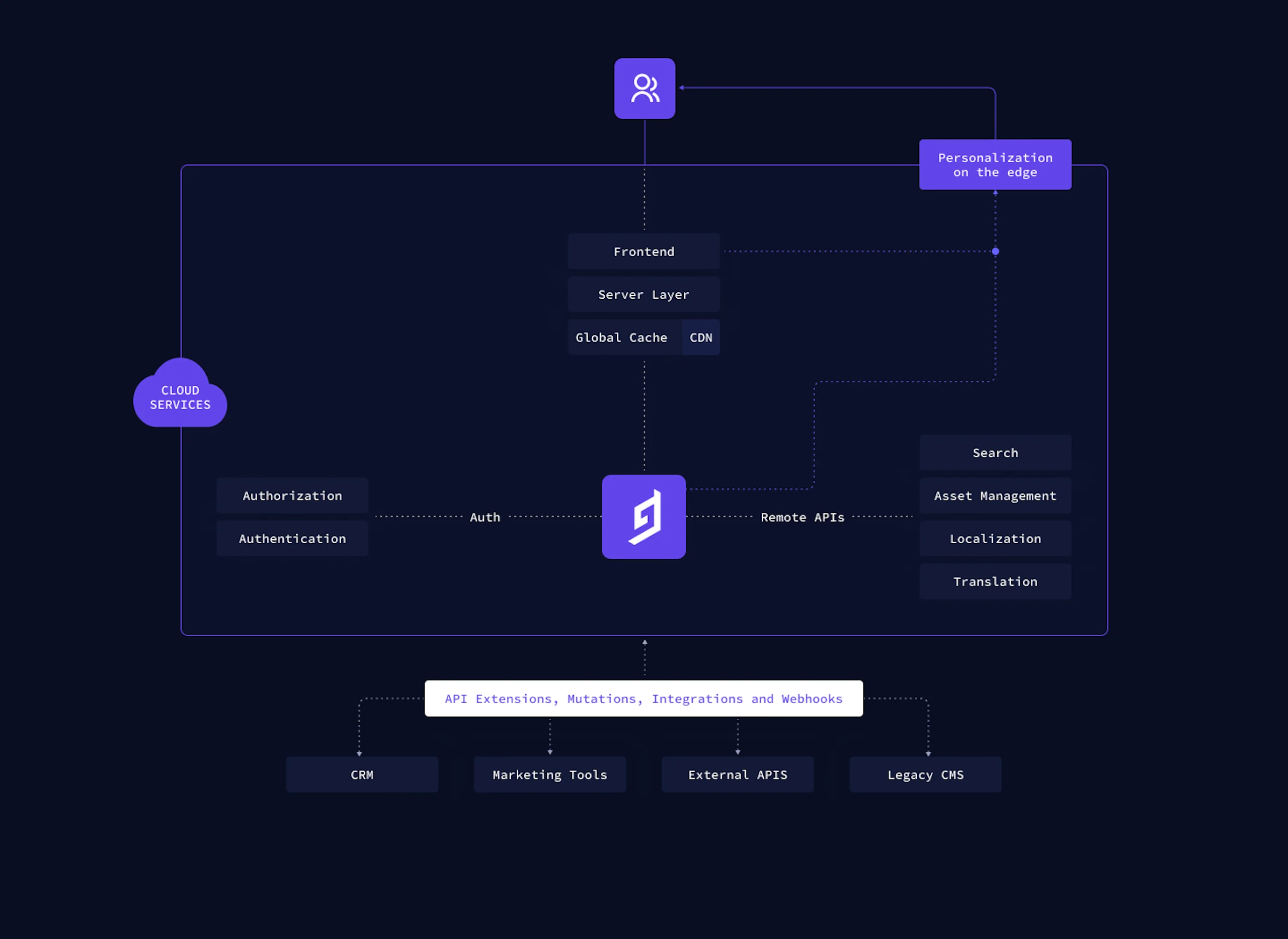

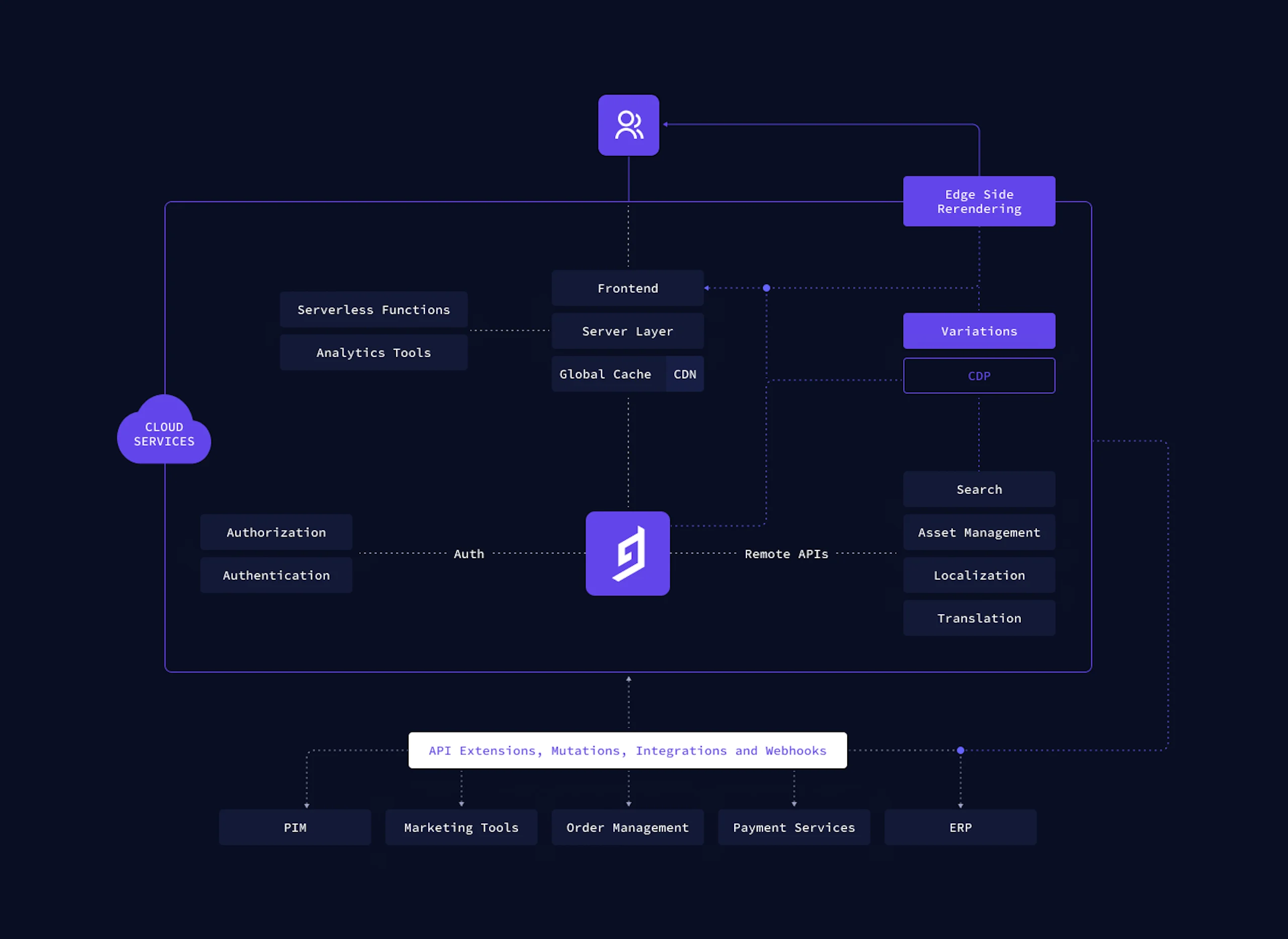

To better visualize how you can implement personalization in a more nuanced way when building composable architectures, here are two examples that would highlight the components coming together, and where personalization would fit in.

Modern Website or Web-App Using JAMstack and Headless Platforms

High-Volume App Focusing on Individualized User Experience
